Homelab Journey – The Why?!

Like many people in IT Infrastructure-land, I find access to a lab environment extremely valuable. Earlier in my career, I could periodically carve a chunk out of a sizable Enterprise-level infrastructure… and it sufficed. I am not in that environment any more, though, and my lab needs have really grown. Earlier in 2020, I hit the breaking point where VMware Workstation on my corporate laptop was not going to cut it any longer. Time to break out the checkbook.

But, what did I want? NUCs look cool, but feel expensive for what they are. Mac Mini, similar to that. Supermicro had a compelling size and entry point to Xeon, but without a full-on rack, I felt a little limited in future options.

It took a little while, but I got to the point where it hit me like a pile of bricks… WHY do I want a lab? Suddenly, things became more clear. I didn’t need to let popular/common options drive my decision. Rather, just like we should do with our own environments and customers, identify the use case, define the requirements, and design around those requirements. Seems silly how long it took to get to that point… but, we’re here now.

So… what did I want to do with my lab? How am I going to use it? WHY do I want a lab?

- Gain experience with vSAN storage (solution and components)

- Gain experience with GPU-based workloads (ex: running OpenAI workloads)

- Gain experience with infrastructure automation solutions (ex: vRealize Automation, Terraform, Ansible, etc…)

- Gain experience with high-speed networking

- Support activities with the TAM Lab program within the VMware TAM Services organization

- Don’t paint me into a corner. Make sure the solution is flexible enough to meet my needs, whatever they are going forward.

How would I do this?

- Leverage vSphere 7.0

- Leverage a GPU for CUDA-based workloads

- All-flash vSAN configuration

- High-speed networking between nodes

So, now that I know what I want it to do, how do I design it? Some design considerations for my homelab were:

- I wanted consistency for all of the nodes in my environment. No snowflakes (well… as much as possible).

- Expansion options available (think PCI slots, storage headers, etc…)

- Appropriate cost for the environment. Money is a design consideration, to be sure. Don’t cheap out, but understand the cost/value relationship for the components.

- vSAN eats up memory on ESXi hosts. Ensure there is enough memory available on the hosts for vSAN to operate as designed while running the proper workloads.

- Leverage low cost/freely available infrastructure services where appropriate.

- This is not because I dislike Microsoft (far from it). However, it goes back to cost of the environment. The licensing to properly run infrastructure services (DNS, certificate authority, etc…) can be overwhelming.

- My network needs really boil down to routing across multiple VLANs in the environment and Jumbo Frame support. I’m not massively interested in notable enterprise networking solutions (ex: Cisco). Fiber-based networking with a copper uplink to the existing network met a price and functionality point that was quite agreeable. Again… don’t overdo it.

- The lab would be deployed in a basement storage area. So, heat and sound were interesting, but it would be OK if the solution put off heat and had a notable sound profile.

With all of that above, I felt confident that the solution I came up with would be able to meet my needs! So… what did I end up with?

3 Nodes. Each node with the following components:

| Component | Quantity | Solution | Comment |
| --- | --- | --- | --- |
| Processor | 1 | AMD Ryzen 5 3600 | 6 cores; 3.6 GHz (boost 4.2 GHz) |
| RAM | 4 | 16GB (64GB in total) | |
| Motherboard | 1 | Gigabyte X570UD | 5 PCIe slots; 4 DIMMs |
| Video Card | 1 | MSI GeForce GT 710 | Used for host console functions. On-board video options not functional due to Ryzen architecture (need Ryzen APU for this). |
| Video Card | 1 | Nvidia Quadro K2200 | SNOWFLAKE! Only used for a single node. Not every node has a GPU. |
| Hypervisor Storage | 1 | SanDisk 64GB Cruzer Fit | Hypervisor installation |
| vSAN Storage | 1 | WD Blue 3D NAND 1TB SSD | vSAN Storage Tier |
| vSAN Storage | 1 | WD Blue 3D NAND 250GB M.2 2280 | vSAN Cache Tier |
| 10Gb Networking | 1 | Intel X520-DA2 | 2 x 10Gb SFP+ |
| 10Gb Networking | 1 | 10Gtek DAC | Support for MikroTik |
| Case | 1 | Fractal Design Focus Case | ATX Mid Tower |
| Power Supply | 1 | Corsair CV Series CV450 | Bronze Certified |

Plus a MikroTik CRS305-1G-4S+IN 5-port 10Gb SFP+ switch for the environment. This little switch includes some additional L2/L3 functions above and beyond my current use-cases that may prove to be useful in the future.

At the end of the day, I let the WHY drive the decision making process. The WHY focused my efforts towards a more intentional and purposeful implementation versus trying to fit the mold of what everyone else was doing. The WHY led me to a roll-your-own deployment that meets my needs now and allows me to pivot in the future.

Are there things I am missing? Certainly… I don’t have out of band management and IPMI. But, I am fine with those things. I have what I need for my homelab because I know WHY I have it.

If you are looking into building your own homelab environment… do yourself a favor and really think about WHY you want one. If you start with the WHY, you’re going down a good path!

Happy homelabbing!

Enabling AMD Ryzen Virtualization Functions

As part of my homelab environment, I am leveraging AMD Ryzen 5 CPUs for the processors. There are a myriad of reasons I went with AMD over Intel (discussion over vBeers at this point), but I have been really happy to this point.

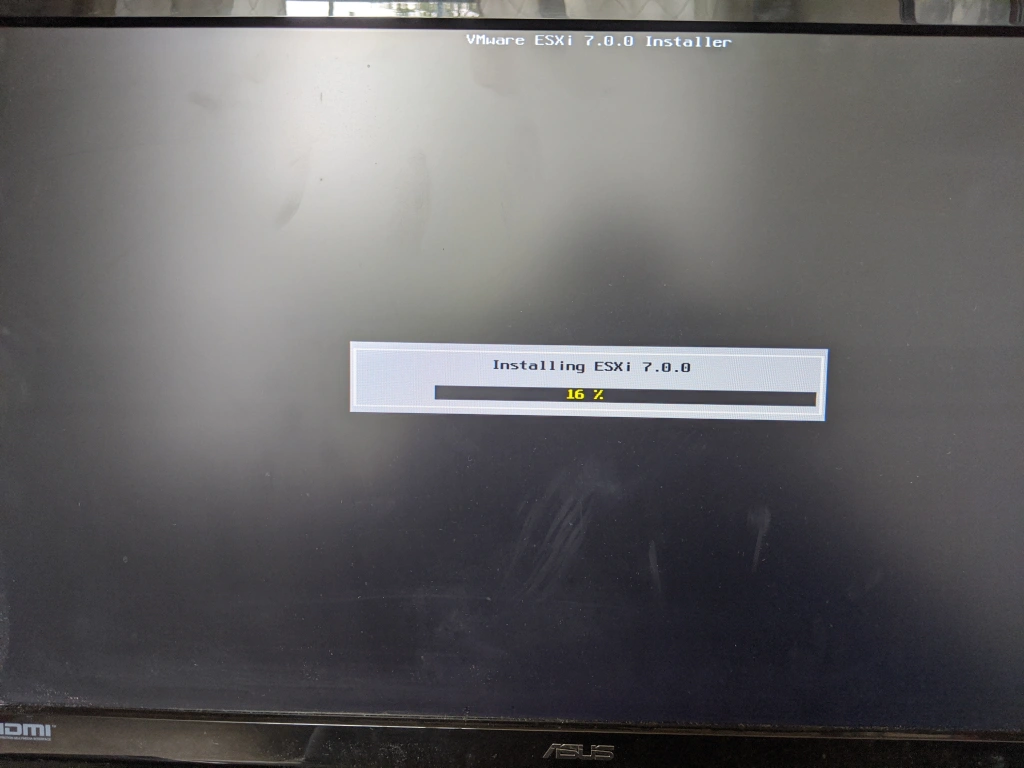

As part of setting up my lab servers, I put everything together and tried to install vSphere 7.0! Come to find out that the virtualization capabilities were not enabled.

I am using a Gigabyte X570UD motherboard in each of the hosts. So, I’ll be running through the BIOS on that platform.

The virtualization functions for AMD Ryzen are provided by the SVM Mode. By enabling SVM Mode, additional CPU capabilities that allow ESXi to do its magic are available. You can check out the gallery below to walk through how to enable on the Gigabyte X570UD motherboard. If you don’t have this specific motherboard, look for the SVM mode in the BIOS.

And… with that… I was back in business!

Ryzen processors are not the traditional processor selection for virtualization environments… but, they are gaining ground. Ryzen seems to be targeted towards desktop processing and gaming needs… which it does well. But, the clock speed, core availability, and price are really compelling for homelabbers, such as myself. It doesn’t surprise me that Gigabyte didn’t enable the features by default, as this is not the targeted use case… but, I am happy SVM mode is a couple of BIOS menus away!

Happy homelabbing!

vSphere 6.7 – vCSA and PSC CLI Deployment Template and Size Gotcha

I’ve been spending some time trying to automate the various components that I use in my homelab. Right now, I’m focusing on some VMUG content development.

As part of the effort, I wanted to leverage the vCSA CLI installer for vSphere 6.7 to spit out some automated PSC and vCenter goodness. In running through this, I ran into a pretty vague gotcha. While I know vSphere 7 is fresh off the presses, I thought I’d at least call out the gotcha for the vast majority of people out there still on the v6.5/v6.7 family.

When deploying vCSA and PSC appliances from the CLI, a number of .JSON templates are available for you to reference. Simply copy the contents, change some of the required fields (hostname, password, IP address, etc…), and provide the .JSON file to the installer. It’s actually pretty slick. And, once you get everything configured properly… Poof, you’re an infrastructure as code Wizard!

You can find the templates at [VCSA .ISO]/vcsa-cli-installer/templates

In the various templates, you will see the following code:

"appliance": {

"__comments": [

"You must provide the 'deployment_option' key with a value, which will affect the VCSA's configuration parameters, such as the VCSA's number of vCPUs, the memory size, the storage size, and the maximum numbers of ESXi hosts and VMs which can be managed. For a list of acceptable values, run the supported deployment sizes help, i.e. vcsa-deploy --supported-deployment-sizes"

],

"thin_disk_mode": true,

"deployment_option": "infrastructure",

"name": "Platform-Services-Controller"

},

Here, you will find two pieces of interesting information:

- deployment_option

- vcsa-deploy --supported-deployment-sizes

The deployment_option line allows you to specify the resource allocation for the VCSA or PSC. However, it is not clear what those values really are. This is where vcsa-deploy --supported-deployment-sizes comes in. When you run the command from the CLI, you are presented with a name along with the vCPU, Memory (GB), Storage (GB), Hosts it can manage, and VMs it can manage. But… there’s a little gap. A gap that cost me some hours in troubleshooting.

Neither the templates, the deployment_option comment, nor the vcsa-deploy --supported-deployment-sizes utility maps the options to the roles of VCSA or PSC.

Let’s take a look at the output from the vcsa-deploy --supported-deployment-sizes command:

As this is a home lab, I want to use the smallest amount of resources necessary to be successful. In this instance, I am developing the content for my Fun with PSCs and vCenters VMUG session. The design of the infrastructure for the session is pretty simple. 3 external PSCs and 1 vCenter. They are not going to be managing ESXi hosts or VMs. Pretty boring for the life of a VCSA and PSC… but, just right for what I need.

In reviewing the Options of the supported deployment sizes, I decided that infrastructure is the way to go. Keeping each VM to 2 vCPU, 4GB RAM, and 60GB disk space means I can minimize the impact to everything else running in the lab. SCORE!
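With the size settled, stamping out the appliance section for each PSC is easy to script. The following is only a minimal Python sketch under a few assumptions: it works on the three keys visible in the template fragment shown earlier (a full template has more sections that are not reproduced here), the helper name `appliance_section` is hypothetical, and the names FUNPSC02 and FUNPSC03 are made up for illustration.

```python
import copy
import json

# Keys copied from the "appliance" fragment shown earlier in this post.
# A complete installer template contains more sections than this.
BASE_APPLIANCE = {
    "thin_disk_mode": True,
    "deployment_option": "infrastructure",
    "name": "Platform-Services-Controller",
}


def appliance_section(name, deployment_option="infrastructure", base=BASE_APPLIANCE):
    """Return a new 'appliance' section with the name and size filled in.

    The base dictionary is copied, so the original template values
    are never modified.
    """
    section = copy.deepcopy(base)
    section["name"] = name
    section["deployment_option"] = deployment_option
    return {"appliance": section}


if __name__ == "__main__":
    # FUNPSC02 and FUNPSC03 are hypothetical names used only for this example.
    for psc_name in ("FUNPSC01", "FUNPSC02", "FUNPSC03"):
        print(json.dumps(appliance_section(psc_name), indent=4))
```

Copying the base section instead of editing it in place keeps one known-good starting point, which helps when the only thing changing between appliances is the name and the deployment_option.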

For the PSCs, this worked great!

"appliance": {

"__comments": [

"You must provide the 'deployment_option' key with a value, which will affect the VCSA's configuration parameters, such as the VCSA's number of vCPUs, the memory size, the storage size, and the maximum numbers of ESXi hosts and VMs which can be managed. For a list of acceptable values, run the supported deployment sizes help, i.e. vcsa-deploy --supported-deployment-sizes"

],

"thin_disk_mode": true,

"deployment_option": "infrastructure",

"name": "FUNPSC01"

I was able to leverage the provided templates to create two PSC-based environments. One with 2 PSCs in replication with each other. Another as a standalone PSC. Pretty simple. Very awesome!

However, I started running into issues with the VCSA template.

FUNVCENTER_on_labesx01.vb.info.json. Error: Unexpected template key.

Cause: The key 'platform_services_controller', under the parent key 'sso',

cannot be found from the install psc schema. Resolution: You must make sure the

template only uses the keys allowed for the install psc deployment type.

================ [FAILED] Task: StructureValidationTask: Executing Template

Structure Validation task execution failed at 16:16:23 ================

================================================================================

Error message: Error: Unexpected template key. Cause: The key

'platform_services_controller', under the parent key 'sso', cannot be found from the install psc schema.

Odd… I’m not installing a PSC.

From this point, I started down a ton of rabbit holes.

- Am I using the right template?

- Is “platform_services_controller” spelled correctly in the .JSON file?

- Let me check the template schemas to see what is allowed

- Is there a bug with the scripts in the VCSA 6.7 U3 deployment scripts?

- …

It took a little time away from the keyboard to reevaluate the situation and reset. I know that vCenter has different demands from that of the PSC… and PSCs don’t directly manage VMs. So, a PSC with no Hosts/VMs to manage is cool. But, maybe not for vCenter. So, I tried tiny as an option.

This time, there was a different error… making progress!

Template structure validation failed for template

FUNVCENTER_on_labesx01.vb.info_for_blog.json. Error: Unexpected template key.

Cause: The key 'platform_services_controller', under the parent key 'sso',

cannot be found from the install embedded schema. Resolution: You must make sure the template only uses the keys allowed for the install embedded deployment type.

================ [FAILED] Task: StructureValidationTask: Executing Template

Structure Validation task execution failed at 16:23:55 ================

================================================================================

Error message: Error: Unexpected template key. Cause: The key

'platform_services_controller', under the parent key 'sso', cannot be found from the install embedded schema.

This time, I was told there was an error when trying to install the embedded deployment type. If you scroll back above, you’ll see that the first time, there was an error when trying to install a psc. So, the value specified for deployment_option is a key decision maker in which template schema to use and what is going to be deployed. It’s not just for resources!

As tiny was not working, but it got me towards a vCenter-like configuration (VCSA with embedded PSC as implied by embedded in the error), I decided to try management-tiny.

[START] Start executing Task: To validate the template structure against the rules specified by a corresponding template schema. at 17:57:55

Template structure validation for template

'FUNVCENTER_on_labesx01.vb.info.json' succeeded.

Structure validation for all templates succeeded.

[SUCCEEDED] Successfully executed Task 'StructureValidationTask: Executing

Template Structure Validation task' in TaskFlow 'template_validation' at

17:57:55

Woohoo! At this point, I was able to see the logic.

"appliance": {

"__comments": [

"You must provide the 'deployment_option' key with a value, which will affect the VCSA's configuration parameters, such as the VCSA's number of vCPUs, the memory size, the storage size, and the maximum numbers of ESXi hosts and VMs which can be managed. For a list of acceptable values, run the supported deployment sizes help, i.e. vcsa-deploy --supported-deployment-sizes"

],

"thin_disk_mode": true,

"deployment_option": "management-tiny",

"name": "funvcenter"

},

- Infrastructure: PSCs

- Management-*: VCSA only (needs external PSC)

- Tiny, Small, Medium, Large, Xlarge: Embedded VCSA and PSC
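That mapping is simple enough to check before ever handing a template to the installer. Here is a minimal Python sketch of the idea. It is an assumption-laden illustration, not part of the vCSA tooling: the function name and the role labels are hypothetical, the option values are assumed to be lowercase as in the templates above, and it only encodes the three categories I observed with 6.7.

```python
# Sizes that, on their own, deploy a VCSA with an embedded PSC
# (assumed lowercase spellings of the names listed above).
EMBEDDED_SIZES = {"tiny", "small", "medium", "large", "xlarge"}


def role_for_deployment_option(option):
    """Map a deployment_option value to the kind of appliance it deploys.

    Role labels are made up for this example:
      'psc'               - Platform Services Controller only
      'vcsa-external-psc' - VCSA only, needs an external PSC
      'embedded'          - VCSA with an embedded PSC
    """
    if option == "infrastructure":
        return "psc"
    if option.startswith("management-"):
        return "vcsa-external-psc"
    if option in EMBEDDED_SIZES:
        return "embedded"
    raise ValueError("Unrecognized deployment_option: " + repr(option))


if __name__ == "__main__":
    for value in ("infrastructure", "tiny", "management-tiny"):
        print(value, "->", role_for_deployment_option(value))
```

A check like this, run against the template before validation, would have pointed me at the deployment_option value instead of the 'sso' section that the error message complained about.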

Seems a bit silly that the resolution for this was simply trying another category. The error message really had me questioning the format of the .JSON file… not the selection of the proper deployment_option. However, luckily, I’m past it and I hope you are too! 🙂

Good luck with automating the deployment of vSphere 6.7 PSCs and VCSAs!

VMUG Sessions – 2020!

One of my goals for 2020 was to present at a VMUG conference. It’s been way too long (since I co-ran the Portland VMUG) since I developed sessions and presented at a VMUG… so, I thought, why not try again!?

I submitted a handful of session ideas for consideration in 2020 and crossed my fingers. Next thing I know, I have been asked to present for 3 VMUGs!

- Seattle VMUG Virtual UserCon – May 14, 2020 – Register Here

- Wisconsin VMUG – Sept 15, 2020 – Register Here

- Denver VMUG – Postponed (awaiting reschedule) – More Info Here

I hope you’re able to join me at one of these events… cheer me on, heckle, ask questions, and learn a TON!

VCP6-NV Network Virtualization Exam Prep And Results

Hard to believe that a mere 8 hours ago, I sat for the VCP6-NV (2V0-642) exam. 77 questions and about 60 minutes later, I walked out as a newly minted VCP!

Truth be told, I have not needed to study like this for quite some time… likely since getting my undergrad many years ago. The type of learning I adapted to in the real world was more bursty, need driven, and broad. So, I really needed to clean the rust off those old studyin’ routines and get to work.

The Internets were massively helpful in not only helping identify what to study but, also, confirming what I thought would be the correct content. This post is my way of paying back for the help I got. If you’re here for the actual test questions and answers, you’re in the wrong place…

Please keep in mind that this is not meant to be prescriptive. Rather, this is what worked for the type of learner I am.

What did I use to study?!

There are some awesome content creators out there with amazing reviews and success stories (Pluralsight, vBrownbags, etc…). I did not use them. I felt like trying to focus on the information from VMware would be the most effective use of my time.

- NSX: Install, Manage, and Configure [6.2] – On Demand

- VMware Education NSX Practice Exam

- NSX 6.2 – Admin Guide

- NSX 6.2 – Design Guide

- VMware Learning Zone – NSX Exam Prep

- VCP6-NV Exam Blueprint

- Hands on Labs

The ICM course’s On Demand structure worked really well. I was concerned about my usual preferred learning style conflicting with the presentation and lab format of the course. However, it was quite nice and I rather enjoyed it. I completed all of the course in about 1.5 weeks… and I have a crazy amount of notes to show for it. Note: if you decide to go through the On Demand course, there are some oddities about the delivery system that you can work to your benefit. Not listening to the robo-voice reading each slide was a sanity saver.

The Design Guide was surprisingly enjoyable… It has been composed in a very thoughtful and logical manner. It needs to be read from cover to cover at least one time as the pages and sections build on top of each other. I found myself re-reading chapters 3 & 5 to help drive some concepts home. Any time spent with the Design Guide was time well spent.

The Learning Zone exam prep content was really nice. Each objective and sub-objective is presented in short 5-10 minute videos. They cover the content in ways that are explanatory and show correct logic in analysis, but don’t give you the answer. They guide you to the water… But, you need to drink it.

What didn’t I do?

- Use external content providers – I felt like I had a good grasp on the concepts from the VMware materials. External content providers would mostly have re-explained concepts that I was already getting pretty well.

- Did not focus on speeds and feeds – Yes… knowing easily referable information like the amount of RAM and vCPU for NSX Manager is within scope of the exam. I can look that up if I need to… And I accepted that I may miss those questions on the exam. My time was more important elsewhere.

- Did not memorize details of UI paths – Again, knowing which tab or right-click option to use is within the scope of the exam, but it was not worth my time. Accepted risk.

Test Day

- Did not study at all. At this point, I knew what I was going to know, and spending time on last-minute things does not yield anything but uncertainty.

- I felt good about the test… Like I did in college… The rust was off the gears! I was calm and accepted the current state of my study and learning as it was.

Tips/Tricks

- Pay attention – very common concepts, themes, principles, rules, restrictions, limits, etc… show up over and over and over.

- Write – our minds retain information better when we write. Writing engages an artistic portion of our brains… and information associated with artistic activities is retained better.

- Schedule the exam – or else you will find a reason to start kicking the can and delaying the prep work

- Exam structure is no surprise – single choice, multiple choice, sometimes answer options are super similar, sometimes answers are super obvious. There is nothing exotic here.

- Question wording / Answer wording – NSX, traditional networking, and network virtualization have similar verbiage, differing implications, and concepts both shared and unique. Consider the context of the question and don’t make assumptions without considering the environment.

- Have fun! – if you can enjoy the process and the test, you will be calmer, more confident, and have a clear thought process.

- NSX is not just L2 overlay – be sure to understand the purpose, mechanics, workflows, and other concepts for the other functions of NSX.

- Pay attention!!! – Did I mention that already? If I were only allowed to give one piece of advice, this would be it.

Bill’s Take

This was a really enjoyable process for me. I got to do something I have not done for quite a while. Plus, I ended up on the passing side of the exam, which does not hurt.

The exam felt appropriate to the level of studying required. It’s very likely that I could have studied certain areas a little more and gotten a higher score. But, I felt like I had a solid hold on the subject matter, so no need to push it.

After going through the learning process for VCP6-NV, I feel like there is value in this certification process. Yes… I recognize that people have differing opinions on certification… and this is mine. Network Virtualization is not a commodity knowledge set like other technology topics may be. The range of NSX specific info, network architecture, and network concepts feel like a good evaluation of a necessary skill set versus a test on a specific product.

Good luck studying and don’t forget to PAY ATTENTION!!

Fixed Block vs Variable Block Deduplication – A Quick Primer

Deduplication technology is quickly becoming the new hotness in the IT industry. Previously, deduplication was relegated to secondary storage tiers as the controller could not always keep up with the storage IO demand. These devices were designed to handle streams of data in and out versus random IO that may show up on primary storage devices. Heck… deduplication has been around in email environments for some time. Just not in the same form we are seeing it today.

However, deduplication is slowly sneaking into new areas of IT… and we are seeing more and more benefit elsewhere. Backup clients, backup servers, primary storage, and who-knows-where in the future.

As deduplication is being deployed across the IT world, the technology continues to advance and become quicker and more efficient. So, in order to try and stay on top of your game, knowing a little about the techniques for deduplication may add another tool in your tool belt and allow you to make a better decision for your company/clients.

Deduplication is accomplished by sharing common blocks of data on storage environments and only storing the changes to the data versus storing a copy of the data AGAIN! This allows for some significant storage savings… especially when you consider that many file changes are minor adjustments versus major data loads (at least as far as corporate IT user data goes).

So, how is this magic accomplished? – Great question, I am glad you asked! Enter Fixed Block deduplication and Variable Block deduplication…

Fixed Block deduplication involves determining a block size and segmenting files/data into those block sizes. Then, those blocks are what are stored in the storage subsystem.

Variable Block deduplication involves using algorithms to determine a variable block size. The data is split based on the algorithm’s determination. Then, those blocks are stored in the subsystem.

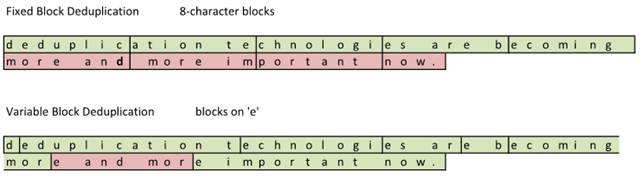

Check out the following example based on the following sentence: “deduplication technologies are becoming more an more important now.”

Notice how the variable block deduplication has some funky block sizes. While this does not look too efficient compared to fixed block, check out what happens when I make a correction to the sentence. Oops… it looks like I used ‘an’ when it should have been ‘and’. Time to change the file: “deduplication technologies are becoming more and more important now.” File –> Save

After the file was changed and deduplicated, this is what the storage subsystem saw:

The red sections represent the blocks that have changed. By adding a single character in the sentence, a ‘d’, the sentence length shifted and more blocks suddenly changed. The Fixed Block solution saw 4 out of 9 blocks changed. The Variable Block solution saw 1 out of 9 blocks changed. Variable block deduplication ends up providing a higher storage density.
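To make the comparison concrete, here is a minimal Python sketch of both approaches run against the two versions of the sentence. It rests on assumptions of mine: the fixed block size is 8 characters, and the "variable" blocks are simply cut after every space, which is a deliberately simplified stand-in for the content-based boundary detection (often a rolling hash) that real products use. All function names are hypothetical.

```python
def fixed_blocks(data, size=8):
    """Cut the data into blocks of exactly `size` characters (the last may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def variable_blocks(data, delimiter=" "):
    """Cut the data after every delimiter, so boundaries follow the content.

    This is a toy version of content-defined chunking: an insertion only
    disturbs the block it lands in, because later boundaries move with the data.
    """
    blocks = []
    start = 0
    for index, char in enumerate(data):
        if char == delimiter:
            blocks.append(data[start:index + 1])
            start = index + 1
    if start < len(data):
        blocks.append(data[start:])
    return blocks


def new_blocks(old, new):
    """Return the blocks in `new` that are not already stored from `old`."""
    stored = set(old)
    return [block for block in new if block not in stored]


if __name__ == "__main__":
    before = "deduplication technologies are becoming more an more important now."
    after = "deduplication technologies are becoming more and more important now."

    for label, splitter in (("fixed", fixed_blocks), ("variable", variable_blocks)):
        changed = new_blocks(splitter(before), splitter(after))
        total = len(splitter(after))
        print(label, "->", len(changed), "of", total, "blocks need to be stored")
```

With these assumed settings, the fixed block pass has to store 4 of 9 blocks and the variable block pass only 1 of 9, which lines up with the counts above. A different block size or boundary rule would give different numbers, but the shifting effect on fixed blocks stays the same.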

Now, if you determine you have something doing fixed block deduplication, don’t go and return it right now. It probably rocks and you are definitely seeing value in what you have. However, if you are in the market for something that deduplicates data, it is not going to hurt to ask the vendor if they use fixed block or variable block deduplication. You should find that you get better density and maximize your storage purchase even more.

Happy storing!

Cloud Crossroads

I feel like I am at a crossroads… and trying to figure out which direction to go. In my life, I strive to know about all kinds of things. Heck, in college, I went through 4-5 different majors because I was so interested in all of them. Computer Science and a Biology minor won out. So, when I come to this crossroad, I am torn… Which Cloud to go with?

The term “Cloud” is really confusing sometimes. While the basic concept is becoming more and more clear, what is not is that we have multiple Cloud types to contend with.

1) Datacenter Cloud – This makes perfect sense to me. My VMware experience and all of the VMware/virtualization kool-aid out there jives very well. The Datacenter Cloud is just like what I have in my datacenter. Just with some added layers of management and automation on top. I am cool with that.

2) Application Cloud – This is where I am getting lost… and, I feel like this may be where things are going, especially for environments sized like my Corporate environment. Application Clouds include Google Gmail, Salesforce Database.com, Google Docs, VMware/Salesforce VMforce, and Windows Live.

In my Corporate environment, we are trying to make a conscious decision to move towards Cloud based resources. We figure that if we can simplify the internal infrastructure to commodity components and start leveraging usage based hosted models, we can actually reap some of the benefits. Starting to acknowledge the trend now and make decisions based on the trend makes it easier to grow into a “Cloud” environment.

So, back to this darn crossroads… Datacenter Cloud or Application Cloud??

The biggest issue I am running into is my data in the Application Cloud. Like most applications, all of our applications need the app tier and the data tier. In the Application Cloud model, the database lives in one location and the app in another. Suddenly, not only do I need to worry about access times and experience for the end user getting to the application, but the access times between the various Cloud providers. AKA – things could be significantly slower.

Additionally, what about backing up the data and accessing those backups? We may have documented policies stating retention values, locations, etc… We all know that song and dance. However, each individual component theoretically operates individually.

Security becomes another issue to address with the Application Cloud environment. I “trust” that my data is secure. However, I am addressing security as credentials. Each service has its own authentication system. So, how do we, as IT professionals, manage these? Existing solutions provide for their own management structure (aka – web console for administration and creation of user-level accounts) or use agents that run on workstations for a pseudo-single sign-on experience. But, what I am looking for is some level of integration between my existing authentication mechanisms and what exists in the Cloud.

One of the final speedbumps in this Cloud crossroad conundrum is how can we ensure that our data is being backed up reliably and that restoration mechanisms are timely and accessible via my company versus needing to hunt down a Cloud provider support person? Many companies have regulations and policies regarding data retention and many Cloud providers cannot deal with those policies. Plus, the business may need to “feel in control” of their data.

Alright… the light is turning green… which way… WHICH WAY…???

I know, I have this awesome SUV, I am going to make my own path. Instead of left or right, I am going to forge straight ahead. With the direction we need to go, we cannot just choose one or another. There are too many advantages for both to ignore them… For those systems with their own authentication methods regardless of being hosted or internal, to the Application Cloud with you! For those that we deem important to have more control over, Datacenter Cloud for you!

As long as we make a conscious decision to move towards some kind of Cloud based solution (be it Application Cloud or Datacenter Cloud), we are moving in the right direction. I feel confident that I am not the only one in the IT world with these concerns and the answers will come in good time. By moving towards Cloud infrastructure now, we can adapt when the technology advances and be more agile and light. The development of policies that handle external authentication systems and data access (backups/recoveries/SLAs/etc…) and business buy-in (perhaps with ROI and cost savings over alternatives) will help drive this path home… and perhaps the business will pay to pave this new road I am blazing. Otherwise, these darn bumps are going to kill me.

Happy Clouding!